
Adding Value Through Anti-Aliasing, Eyefinity, And Video |

|

A Suite Of Sweet Outputs

When it comes to gaming, I go with whichever company shows me the best performance, least amount of noise, and highest degree of reliability. But I’ve been running AMD graphics for more than a year now because of Eyefinity. Before that, I used an Nvidia Quadro NVS card in my workstation, requiring a second machine for gaming. While I was able to use four LCDs on the Quadro NVS, I had to scale back to three when I popped in a Radeon HD 5850. Now, with the Radeon HD 6800- and 6900-series cards, it’s possible to swap back to four.

The 6970 and 6950 sport the same five display outputs as the two 6800-series boards: two mini-DisplayPort 1.2 connectors, an HDMI 1.4a port, and two DVI outputs (one single-link and the other dual-link). From those five, you can use a combination of four as independent outputs, so long as two of them are DisplayPort.
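The four-display rule described above is easy to enumerate. The following is a minimal sketch, assuming the rule simply means that any four-output set must include both mini-DisplayPort connectors; the connector labels and the function name are illustrative, not anything AMD's driver exposes.

```python
from itertools import combinations

# Connector list taken from the article; the labels themselves are illustrative.
OUTPUTS = [
    "mini-DP 1",
    "mini-DP 2",
    "HDMI 1.4a",
    "DVI single-link",
    "DVI dual-link",
]

def valid_four_display_sets(outputs):
    """Return every set of four outputs that includes both DisplayPort connectors."""
    valid = []
    for combo in combinations(outputs, 4):
        dp_count = sum(1 for name in combo if name.startswith("mini-DP"))
        if dp_count == 2:  # assumed rule: both DisplayPort outputs must be in use
            valid.append(combo)
    return valid

for combo in valid_four_display_sets(OUTPUTS):
    print(", ".join(combo))
```

Under that reading, the choice comes down to which one of the HDMI or DVI outputs you leave unused.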

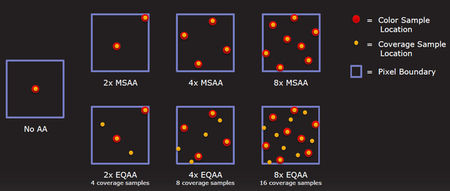

In the future, it’ll actually be possible to attach six displays, eradicating the need for a special, more expensive Eyefinity 6 Edition board. Using DisplayPort 1.2, you can daisy-chain or connect a multi-stream transport hub, supporting the sextet of screens from the two mini-DP ports. That won’t happen until we’re well into 2011, though. Just something to keep in mind.

AMD Gets CSAA

With the Radeon HD 6800-series boards, AMD picked up morphological anti-aliasing, a DirectCompute-based post-processing filtering technique that enables AA even in games with no native AA support. This time around, the company is adding Enhanced Quality Anti-Aliasing. EQAA basically improves existing multi-sampling anti-aliasing modes with up to 16 coverage samples per pixel.

Understanding what that means requires a couple of steps backward. When you apply supersampling to an image, each “sample” represents shaded color, stored color/z/stencil, and coverage. In essence, this is the equivalent of rendering to an oversized buffer and downfiltering. Multi-sampling helps reduce the performance hit of this intensive operation by decoupling the shaded samples from color and coverage. The process works with fewer shader samples, while compromising nothing on the color/z/stencil and coverage sampling. EQAA, which looks to be equivalent to Nvidia’s CSAA, decouples coverage from color/z/stencil, cutting the memory bandwidth costs and therefore leaving a much smaller performance footprint.

Now, AMD believes it has more control over the relationship between color and coverage samples than Nvidia. So, while the implementation is roughly equivalent now, the company is looking at ways to expose its advantage in the future.

You Vee Dee Three

The Radeon HD 6900s sport the same UVD 3 fixed-function hardware enabled on the 6800s. That means acceleration of MPEG-4 ASP and more of the MPEG-2 pipeline (relatively minor additions), plus the addition of Multiview Video Coding, an amendment to H.264/MPEG-4 AVC facilitating stereoscopic playback. In essence, we’re talking about hardware-based support for 3D Blu-ray over HDMI 1.4a. This helps catch AMD up to Nvidia. However, Intel has something waiting in the wings that’ll take both graphics companies by surprise. In a couple of weeks, we’ll be able to tell you more.

PowerTune: Changing The Way You Overclock |

|

Then

Over time, AMD and Nvidia have integrated specific capabilities to help their hardware cope with the rigors of taxing applications and then gracefully scale back when the load isn’t as high. Under extreme duress, usually in a piece of software like FurMark specifically written to apply atypically-intense workloads, both companies are able to throttle voltages and clock rates to protect against an unsustainable thermal situation. Additionally, they’ve incorporated protection mechanisms for voltage regulator circuitry that’ll also drop GPU clocks if an overvoltage occurs, even before the graphics processor heats up.

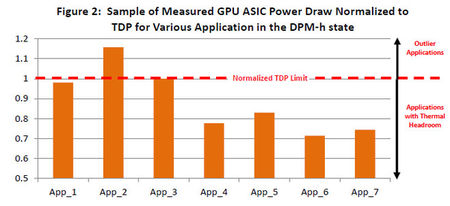

At the other end of the spectrum, Radeon and GeForce boards spin down when they’re not being taxed. This translates to significant power savings (not to mention better thermal and acoustic properties). The flexibility to scale up and down like this is what makes it possible to drop a desktop-class piece of silicon into a notebook and still end up with a useable system.

AMD’s suite of power management technologies has, for many generations, gone by the name of PowerPlay (apropos, given ATI’s origins in Toronto). It’s best known on the mobile side, because that’s where specific thermal considerations most affect what a given GPU can do. But PowerPlay is fairly rudimentary in the grand scheme of things. It supports an idle state, a peak power state, and intermediate states for things like video playback. However, each voltage/frequency combination is static, like rungs on a ladder.

The problem is that applications don’t all behave the same way. So, even if a GPU is in its highest performance state, a piece of software like FurMark might trigger 260 W of power draw, while an application like Crysis pushes the card to consume 220 W. If you’re designing a graphics board, you can’t set the clocks and voltages with Crysis in mind; you have to make sure it’s stable in FurMark, too. That sort of worst-case combination of factors is what goes into the thermal design power we so often cite in our reviews.

A company like AMD or Nvidia defines a thermal design power for an entire graphics card, representing maximum power draw for reliable operation of the GPU, voltage regulation, memory, and so on. When they set the voltage and clock rate for that top P-state, three things are being taken into consideration: the TDP, the highest stable frequency at a given voltage, and the power characteristics of applications, which end up determining draw under full load, since some push hardware much harder than others.

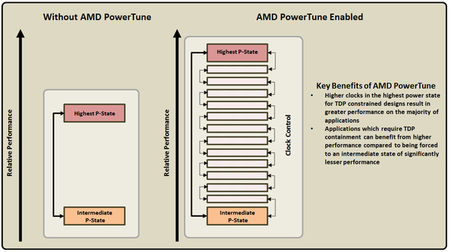

Now, in some cases, you might have to artificially cap a GPU’s performance to get it to fit within a given power envelope; this is particularly common in notebooks. Similarly, it might become necessary to limit clock speed to fit within the PCI Express specification, for example. Sadly, the result could be that you limit the performance of World of Warcraft because you have to cap clocks with 3DMark Vantage’s Perlin Noise test in mind, preventing instability when that test runs.

Suddenly, it makes a lot more sense why both GPU manufacturers hate programs like FurMark and OCCT so much. Those "outlier" apps, as they call them, artificially hobble what their cards can do. At the end of the day, you have this situation where graphics cards are protected from damage. But the protection mechanism hammers performance in the name of safety. And if you’re running an application that doesn’t reach the board’s power limit, then you wind up leaving it underutilized; that’s performance left on the table.

Now

AMD claims that its PowerTune technology addresses both “power problems” that GPU vendors face through dynamic TDP management. Instead of scaling up and down static power states, PowerTune dynamically calculates a GPU engine clock based on current power draw, right up to its highest possible state. Should you dial in an overclock and run an application that pushes the card beyond its TDP, PowerTune is supposed to keep the GPU in its highest P-state, but cut back on power use by dynamically reducing clock speed.

This is not to say that PowerTune will prevent you from crashing if you get too aggressive on your overclock. We tried upping the Radeon HD 6970’s clocks in AMD’s Catalyst Control Center software, keeping PowerTune at its factory setting, and still managed to get Just Cause 2 and Metro 2033 to crater.

Also, it’s worth noting that actually using PowerTune is akin to overclocking. Should your shiny new 6000-series card’s death turn out to be PowerTune-related, a warranty won’t cover it. Sounds a little like Nissan equipping its GT-R with launch control, and then denying warranty claims when someone pops the tranny. Nevertheless, you’ve been warned.

How does PowerTune help performance? Well, rather than designing the Radeon HD 6000s with worst-case applications in mind, AMD is able to dial in a higher core clock at the factory (880 MHz in the case of the 6970) and rely on PowerTune to modulate performance down in the applications that would have previously forced the company to ship at, say, 750 MHz.

How It Works

So, let’s say you’re overclocking your card, leaving PowerTune at its default setting in AMD’s driver. If you run an application that wasn’t TDP-constrained by the default clock, and still isn’t constrained by the higher clock, you’ll see the scaling you expected. If the application wasn’t TDP-limited before the overclock, but does cross that threshold afterward, you’ll realize a smaller performance boost. Finally, if the application was already pushing the card’s TDP, overclocking isn’t going to get you anything extra, because PowerTune was already modulating performance.
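The three overclocking cases above can be illustrated with a toy model. This is only a sketch under loud assumptions: it treats power as scaling linearly with engine clock, uses made-up per-application load factors, and borrows the 250 W limit the article quotes for the Radeon HD 6970. The function name `powertune_clock` and the 950 MHz overclock are hypothetical; real PowerTune estimates power from on-die activity counters rather than a fixed ratio.

```python
def powertune_clock(requested_mhz, watts_per_mhz, power_limit_w):
    """Return the engine clock (MHz) after capping estimated power at the limit.

    watts_per_mhz is a made-up per-application load factor used for illustration.
    """
    estimated_power = requested_mhz * watts_per_mhz
    if estimated_power <= power_limit_w:
        return requested_mhz  # under the cap: run at the requested clock
    return power_limit_w / watts_per_mhz  # over the cap: pull the clock down

POWER_LIMIT_W = 250.0   # PowerTune limit the article cites for the 6970
STOCK_MHZ = 880.0       # factory engine clock
OVERCLOCK_MHZ = 950.0   # hypothetical overclock

# Hypothetical load factors chosen so each app lands in one of the three cases.
apps = {"light": 0.20, "borderline": 0.27, "heavy": 0.30}

for name, load in apps.items():
    before = powertune_clock(STOCK_MHZ, load, POWER_LIMIT_W)
    after = powertune_clock(OVERCLOCK_MHZ, load, POWER_LIMIT_W)
    print(f"{name}: {before:.0f} MHz -> {after:.0f} MHz")
```

In this model the light app gets the full overclock, the borderline app gets only part of it, and the heavy app ends up at the same throttled clock either way.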

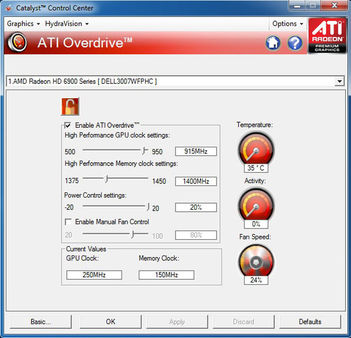

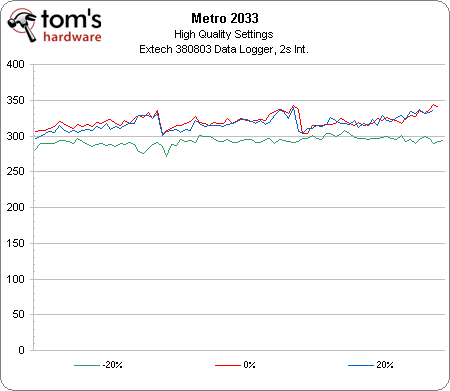

AMD gives you a way around this, though. In the Catalyst Control Center, under the AMD Overdrive tab, there’s a PowerTune slider that goes from -20% to +20%. Sliding down the scale reins in maximum TDP, helping you save energy at the cost of performance. Moving the other direction creates thermal headroom, allowing higher performance in apps that might have been capped previously. In order to test this out, we fired up a few games to spot check PowerTune’s behavior, eventually settling on Metro 2033—the same app we use to log power consumption later in this piece. We also dialed in a slight overclock on our Radeon HD 6970 (915/1400 MHz). With the slider set to -20%, we saw 48.54 frames per second at the game’s High detail setting. At the default 0%, performance jumped to 56.43 FPS. At +20%, performance increased slightly to 57.33 FPS.
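To put those numbers in perspective, here is a small sketch of the arithmetic. It assumes the slider scales the board's power limit linearly from a 250 W baseline (the 6970's stated PowerTune maximum); the helper names are illustrative, and the frame rates are the Metro 2033 results quoted above.

```python
BASE_LIMIT_W = 250.0  # assumed baseline: the 6970's stated PowerTune maximum

def slider_limit(base_w, slider_percent):
    """Scale the power limit by the slider setting (assumed linear, -20 to +20)."""
    if not -20 <= slider_percent <= 20:
        raise ValueError("slider range is -20% to +20%")
    return base_w * (1 + slider_percent / 100)

def percent_change(old, new):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100

# Metro 2033 results from the text, keyed by slider setting.
fps = {-20: 48.54, 0: 56.43, 20: 57.33}

for setting, frames in fps.items():
    limit = slider_limit(BASE_LIMIT_W, setting)
    delta = percent_change(fps[0], frames)
    print(f"{setting:+d}%: limit {limit:.0f} W, {frames} FPS ({delta:+.1f}% vs. default)")
```

The asymmetry is the interesting part: cutting the limit costs far more performance than raising it returns.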

The logged power chart tells the tale. Dropping the PowerTune slider clearly pulls down peak power use (the difference is about 27 W on average). But because Metro is already using a lot of power, there isn’t any headroom left for the card to drive extra performance. In a sense, AMD has already extracted much of the overclocking headroom you might have otherwise pursued in order to make the 6900-series cards more competitive. You can use the PowerTune slider to make more headroom available, but at the end of the day, the gains you see will be application-dependent.

AMD says that PowerTune is a silicon-level feature enabled by counters placed throughout the GPU. It works in real-time without relying on driver or application support. It’s programmable, too, so you can expect it to make a reappearance when Cayman is turned into a mobile part called Blackcomb.

|

Source: AMD. The difference is more granularity |

|

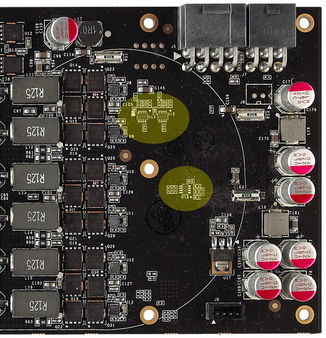

Power-monitoring circuitry added to GeForce GTX 580 |

Meet Radeon HD 6970 And Radeon HD 6950 |

|

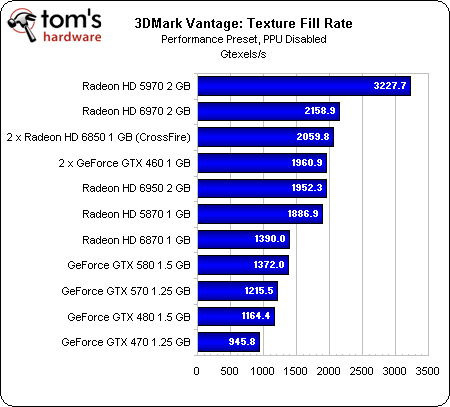

AMD’s Cayman currently appears on two different graphics cards: Radeon HD 6970 and Radeon HD 6950. Both boards use the same GPU in slightly different configurations. The flagship features Cayman in its unadulterated form, with all 24 SIMD engines turned on, along with 96 associated texture units. In addition to the full complement of Cayman’s specs, the 6970 also runs at an elevated 880 MHz clock rate.

As with previous architectures, AMD employs a 256-bit memory bus. This time around, however, the company’s first shipping boards feature 2 GB of GDDR5 memory. This is particularly notable in light of the fact that it’s using ICs capable of 1375 MHz on the Radeon HD 6970, yielding a 5500 MT/s data rate and up to 176 GB/s of bandwidth. Nvidia gets more throughput using slower ICs, but at the expense of a wider memory bus, resulting in a larger GPU and more PCB traces.

AMD says that, at idle, the Radeon HD 6970 will scale all the way down to 20 W. That’s 7 W lower than the Cypress-based Radeon HD 5870. The flagship card also sports a PowerTune maximum power (effectively its TDP) of 250 W. In comparison, the Radeon HD 5870 has a 188 W maximum active power. Uh oh. Didn’t Nvidia’s GeForce GTX 480 also have a 250 W peak thermal design figure? As you’ll see on the power consumption page, AMD doesn’t come anywhere near GeForce GTX 480 levels, though. More typical in gaming situations, the company says, is roughly 190 W.

The Radeon HD 6950 sports the same Cayman GPU, but it loses two of the SIMD engines, yielding 1408 total ALUs (22 SIMDs * 16 thread processors per engine * 4 ALUs per thread processor). It also gives up the four texture units tied to each disabled SIMD engine, dropping the total from 96 to 88. AMD doesn’t alter the chip’s back-end, delivering the same 32 ROPs found on Radeon HD 6970, and indeed the Cypress-based Radeon HD 5870 as well. Additionally, we’re looking at the same 2 GB frame buffer at first, though AMD says board partners are already working on 1 GB versions, too. Clock rates do drop, though. The Radeon HD 6950’s core runs at 800 MHz and its memory is slowed to 1250 MHz, yielding a 5000 MT/s data rate and up to 160 GB/s of throughput.
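The unit counts and memory figures above follow from simple arithmetic, sketched here in Python. The function names are illustrative; the four-transfers-per-clock factor is inferred from the article's own pairing of 1375 MHz with 5500 MT/s, and bandwidth is computed as a theoretical peak with 1 GB taken as 10^9 bytes. The results should land close to the figures quoted above.

```python
def alu_count(simd_engines, thread_processors_per_simd=16, alus_per_thread_processor=4):
    """Total ALUs for a given number of active SIMD engines."""
    return simd_engines * thread_processors_per_simd * alus_per_thread_processor

def texture_units(simd_engines, units_per_simd=4):
    """Total texture units, at four per active SIMD engine."""
    return simd_engines * units_per_simd

def bandwidth_gb_s(memory_clock_mhz, bus_width_bits=256, transfers_per_clock=4):
    """Peak theoretical memory bandwidth in GB/s (1 GB = 10^9 bytes)."""
    data_rate_mt_s = memory_clock_mhz * transfers_per_clock
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mt_s * 1e6 * bytes_per_transfer / 1e9

cards = {
    "Radeon HD 6970": {"simds": 24, "memory_mhz": 1375},
    "Radeon HD 6950": {"simds": 22, "memory_mhz": 1250},
}

for name, spec in cards.items():
    print(
        f"{name}: {alu_count(spec['simds'])} ALUs, "
        f"{texture_units(spec['simds'])} texture units, "
        f"{bandwidth_gb_s(spec['memory_mhz']):.0f} GB/s"
    )
```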

Cutting back on active resources doesn’t reduce idle power consumption at all, but it does purportedly drop the peak PowerTune limit to 200 W, bringing typical gaming power down to about 140 W (a 50 W reduction compared to Radeon HD 6970). Not a crew to take quantifiable data at face value, we ran our own logged power measurements (available further into the story) and found roughly 42 W separating the 6970 and 6950. That’s close enough to AMD’s specs for us.

Physically, the Radeon HD 6970 and Radeon HD 6950 are almost identical. They each measure nearly 11” long; that’s about an inch longer than the Radeon HD 6870 and about half an inch longer than the GeForce GTX 580/570.

The Radeon HD 6970’s power demands necessitate one eight-pin and one six-pin auxiliary connector, while the Radeon HD 6950’s lower clocks make it possible to get away with two six-pin connectors. Otherwise, they both have the same collection of display outputs and the same two CrossFire bridges, enabling configurations of up to four cards working in tandem. As with prior generations, the new Radeons employ the same vapor chamber cooling technology that Nvidia is touting on its latest boards. The result is impressive acoustic performance; in single-card configurations you rarely even hear the thing spin up under load.

One last interesting add-on that AMD chose to integrate is a switch right next to the CrossFire connectors. Both Radeon HD 6900-series cards feature two BIOS files (one locked by AMD, the other available for modding), and flipping that switch swaps between them. Now, most folks will never flash the BIOS on their video card. But for those who do, and for press guys like us who sometimes end up with early firmware that needs to be updated to retail status, this comes in handy.

Test Setup And Benchmarks |

|

Today's comparison includes one of the largest collections of graphics cards we've ever put into a single piece. Between the general benchmarks and the added CrossFire/SLI numbers, we've included two Radeon HD 6970s, two Radeon HD 6950s, two Radeon HD 6870s, two Radeon HD 6850s, a Radeon HD 5970, a Radeon HD 5870, two GeForce GTX 580s, a GeForce GTX 480, two GeForce GTX 570s, a GeForce GTX 470, and two GeForce GTX 460s. By my count, that's more than 47 billion transistors worth of graphics hardware on the test bench. Much of this is the result of reader feedback, both in the comments sections of my stories, which I monitor as often as time permits, and on Twitter, which I'm able to check more regularly. Keep that feedback coming. Two notable take-aways from the last time around: I've added the GeForce GTX 460s for reference, and I've included more extensive CrossFire/SLI testing with scaling analysis.

|

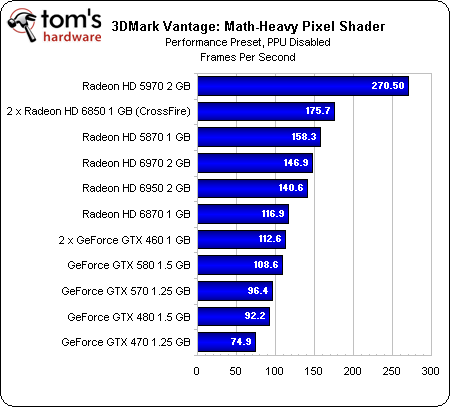

Benchmark Results: 3DMark Vantage (DX10) |

|

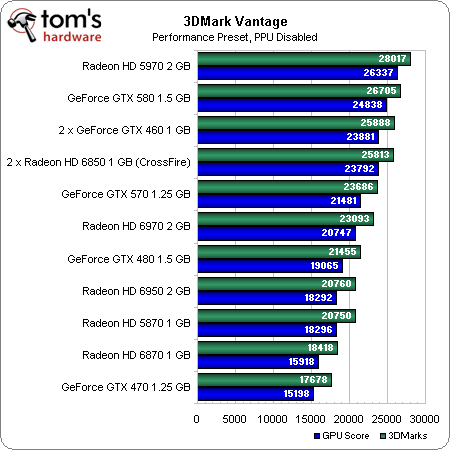

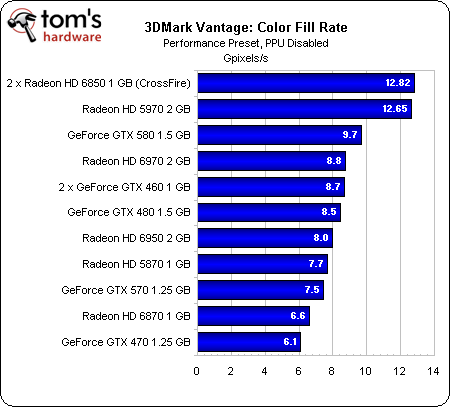

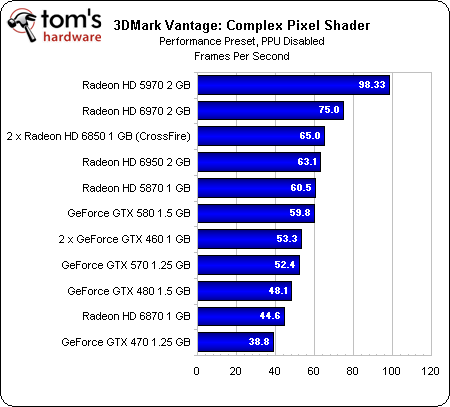

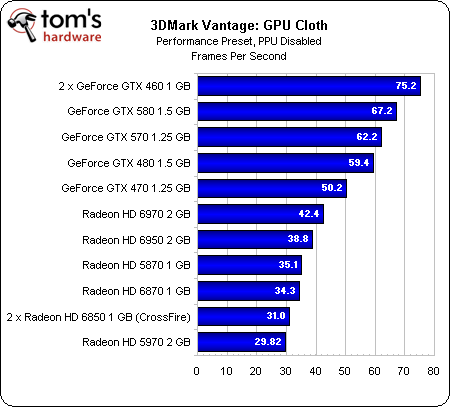

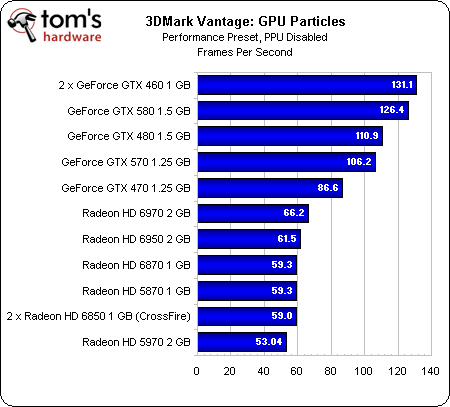

3DMark Vantage gives us an interesting look at synthetic performance. These results reflect AMD’s projected interpretation of the current graphics card market almost exactly. That is to say the Radeon HD 6970 competes with the GeForce GTX 480/570, and the Radeon HD 6950 doesn’t have an exact match in the company’s portfolio. What you’ll find, though, is that the 6950 has a bit of a sibling rivalry going here. AMD’s Radeon HD 5870 remains a very capable piece of graphics hardware priced attractively under $300 (as low as $260 with rebates). In order for the 6950 to be set apart, AMD needs to price it competitively.

|

|

Radeon HD 6970 2 GB |

|

Radeon HD 6950 2 GB |

|

Check out the switch next to the CrossFire link |

|

That's more than 47 billion transistors worth of GPU |

|

| Test Hardware | |
|---|---|
| Processors | Intel Core i7-980X (Gulftown) 3.33 GHz at 3.73 GHz (28 * 133 MHz), LGA 1366, 6.4 GT/s QPI, 12 MB Shared L3, Hyper-Threading enabled, Power-savings enabled |
| Motherboard | Gigabyte X58A-UD5 (LGA 1366) Intel X58/ICH10R, BIOS FB |
| Memory | Kingston 6 GB (3 x 2 GB) DDR3-2000, KHX2000C8D3T1K3/6GX @ 8-8-8-24 and 1.65 V |
| Hard Drive | Intel SSDSA2M160G2GC 160 GB SATA 3Gb/s |
| Graphics | AMD Radeon HD 6970 2 GB |
| | AMD Radeon HD 6950 2 GB |
| | Nvidia GeForce GTX 570 1.25 GB |
| | Nvidia GeForce GTX 580 1.5 GB |
| | Nvidia GeForce GTX 480 1.5 GB |
| | Nvidia GeForce GTX 470 1.25 GB |
| | 2 x Zotac GeForce GTX 460 1 GB SLI |
| | 2 x AMD Radeon HD 6850 1 GB CrossFire |
| | AMD Radeon HD 5970 2 GB |
| | AMD Radeon HD 5870 1 GB |
| | AMD Radeon HD 6870 1 GB |
| Power Supply | Cooler Master UCP-1000 W |

| System Software And Drivers | |
|---|---|
| Operating System | Windows 7 Ultimate 64-bit |
| DirectX | DirectX 11 |
| Graphics Driver | AMD 8.79.6.2RC2_Dec7 (For Radeon HD 6970 and 6950) |
| | AMD Catalyst 10.10d |
| | AMD Catalyst 10.10e (For Radeon HD 6850 1 GB in CrossFire) |
| | Nvidia GeForce Release 263.09 (For GTX 570) |
| | Nvidia GeForce Release 260.99 (For GTX 480 and 470) |
| | Nvidia GeForce Release 262.99 (For GTX 580) |

| Games | |
|---|---|
| Lost Planet 2 | Highest Quality Settings, No AA, 8x MSAA / 16x AF, vsync off, 1680x1050 / 1920x1200 / 2560x1600, DirectX 11, Steam version |
| Just Cause 2 | Highest Quality Settings, No AA / 16xAF, vsync off, 1680x1050 / 1920x1200 / 2560x1600, Bokeh filter and GPU water disabled (for Nvidia cards), Concrete Jungle Benchmark |
| Metro 2033 | Medium Settings, AAA, 4x MSAA / 16x AF, 1680x1050 / 1920x1200 / 2560x1600, Built-in Benchmark, Steam version |
| DiRT 2 | Ultra High Settings, 4x AA / No AF, 1680x1050 / 1920x1200 / 2560x1600, Steam version, Custom benchmark script, DX11 Rendering |
| Aliens Vs. Predator Benchmark | Highest Quality Settings, SSAO, No AA / 16xAF, vsync off, 1680x1050 / 1920x1200 / 2560x1600 |
| Battlefield: Bad Company 2 | Custom (Highest) Quality Settings, 8x MSAA / 16xAF, 1680x1050 / 1920x1200 / 2560x1600, opening cinematic, 145 second sequence, FRAPS |
| 3DMark Vantage | Performance Default, PPU Disabled |
| HAWX 2 | Highest Quality Settings, 8x AA, 1920x1200, Retail Version, Built-in Benchmark, Tessellation on/off |